SOTA ASR Tooling: Long-form Transcription

Benchmarking the different Whisper frameworks for long-form transcription

Overview

OpenAI’s whisper is currently the state-of-the-art model for automatic speech recognition (ASR). Since its release in September 2022, a growing number of frameworks have been built on top of it. One area where the open source community has contributed significantly is long-form transcription. In this blog post, I will share the results of my experiments benchmarking the most common whisper frameworks.

The Benchmark

The task is to accurately and efficiently transcribe a relatively long audio file. I chose The State of GPT by Andrej Karpathy as our test set. At over 42 minutes, it’s long enough for our benchmark without requiring too much GPU time to transcribe.

I manually curated the reference (label) transcript by listening to the audio and correcting the attached closed captions.

We will calculate the following metrics:

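wer (%) - word error rate: the number of word substitutions, deletions and insertions needed to match the reference, divided by the number of words in the reference. Used for measuring the accuracy of an ASR model’s predictions (lower is better).

cer (%) - character error rate: the same measure computed over characters instead of words.

latency (seconds or minutes) - the wall-clock time needed to transcribe the whole file. Used, together with the vram (GPU memory) usage, for measuring the efficiency of an ASR model.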
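To make the accuracy metrics concrete, here is a minimal sketch of how wer and cer can be computed from a plain Levenshtein (edit) distance. It assumes both texts are already normalized (casing, punctuation); the helpers `edit_distance`, `wer` and `cer` are hypothetical and not necessarily how the benchmark notebook computes these numbers.

```python
from typing import Sequence


def edit_distance(ref: Sequence, hyp: Sequence) -> int:
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from an empty ref prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (free if the items match)
            )
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent (assumes already-normalized text)."""
    ref_words = reference.split()
    return 100 * edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate in percent, computed over raw characters (spaces included)."""
    return 100 * edit_distance(reference, hypothesis) / len(reference)


# 1 substitution (sat -> sit) + 1 deletion (the) over 6 reference words
print(round(wer("the cat sat on the mat", "the cat sit on mat"), 2))  # 33.33
```

Note that wer can exceed 100% when the prediction contains many insertions, for example when a model hallucinates repeated words.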

Note: We will be using whisper-large-v2 in these benchmarks unless stated otherwise.

Long-form Transcription

Whisper is an encoder-decoder transformer that converts speech to text. It has demonstrated excellent accuracy and robustness across many languages. However, whisper was trained on audio segments of 30 seconds or less, and its encoder always takes a fixed 30-second window as input. As a result, whisper can’t transcribe audio longer than 30 seconds (think podcasts, audiobooks, YouTube videos, lectures) out of the box.
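To see what this limit means in practice, here is a pure-Python sketch of how the input is prepared: whisper works on 16 kHz audio, and every forward pass sees exactly 30 seconds, so shorter clips are zero-padded and longer ones are truncated. The constants mirror those in openai-whisper’s `whisper/audio.py`, but this list-based `pad_or_trim` is a simplified stand-in for the library’s array version, not its actual code.

```python
import math
from typing import List

SAMPLE_RATE = 16_000                    # whisper expects 16 kHz mono audio
CHUNK_LENGTH = 30                       # seconds of audio per forward pass
N_SAMPLES = SAMPLE_RATE * CHUNK_LENGTH  # 480,000 samples per window


def pad_or_trim(samples: List[float], length: int = N_SAMPLES) -> List[float]:
    """Zero-pad or truncate a signal so it is exactly `length` samples long."""
    if len(samples) >= length:
        return samples[:length]  # everything after 30 s is silently dropped
    return samples + [0.0] * (length - len(samples))


# A 10-second clip is padded up to a full 30-second window...
clip = [0.1] * (10 * SAMPLE_RATE)
print(len(pad_or_trim(clip)))  # 480000

# ...while a 42-minute talk would need this many 30-second windows to cover it
talk_samples = 42 * 60 * SAMPLE_RATE
print(math.ceil(talk_samples / N_SAMPLES))  # 84
```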

The open source community has come up with several algorithms that allow us to use whisper to transcribe long audio files. We’ll cover the most common ones in the following sections.

1. OpenAI’s Sequential Algorithm

One such algorithm was developed by the OpenAI whisper team and is described in the whisper paper.

The algorithm has the following steps:

Run whisper on the first 30 seconds of the audio and get its predictions in timestamp mode.

If whisper predicts an ending timestamp for the last text chunk, we shift the audio buffer to the next 30 seconds. Otherwise, we shift the audio buffer to start from the beginning timestamp of the last text chunk.

We then feed in the new audio chunk and pass the predicted transcript of the previous segment as a prompt to whisper’s decoder:

\(\text{pred}_2 = \text{whisper}(\text{audio}=x_2, \text{prompt}=\text{pred}_1)\)

Again, if whisper predicts an ending timestamp for the last text chunk, we shift the audio buffer to the next 30 seconds. Otherwise, we shift the audio buffer to start from the beginning timestamp of the last text chunk.

Repeat the previous steps until we’ve gone through the entire audio file.
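The loop described in these steps can be sketched as follows. This is a simplified illustration, not openai-whisper’s actual `transcribe` code: `decode(offset, prompt)` is a hypothetical stand-in for one whisper forward pass in timestamp mode, the toy decoder works on made-up utterance timings, and the handling of empty windows and the no-progress guard are my own simplifications.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

WINDOW = 30.0  # seconds of audio per forward pass


@dataclass
class Segment:
    start: float            # absolute start time in seconds
    end: Optional[float]    # None when no closing timestamp was predicted
    text: str


def transcribe_sequential(duration: float,
                          decode: Callable[[float, str], List[Segment]]) -> List[str]:
    """Seek-based long-form loop over audio[offset : offset + 30 s]."""
    offset, prompt, transcript = 0.0, "", []
    while offset < duration:
        segments = decode(offset, prompt)
        if not segments:                      # nothing recognized: skip the window
            offset += WINDOW
            continue
        last = segments[-1]
        if last.end is not None or last.start <= offset:
            # Last segment is complete (or we'd make no progress): keep all of it
            # and move the buffer forward by a full window.
            finished, offset = segments, offset + WINDOW
        else:
            # Last segment was cut off by the window edge: drop it and restart
            # the buffer at its beginning timestamp.
            finished, offset = segments[:-1], last.start
        transcript.extend(s.text for s in finished)
        prompt = " ".join(s.text for s in finished)  # condition the next window
    return transcript


# Toy "audio": (start, end, text) utterances with made-up timings
utterances = [(0, 12, "hello there"), (13, 27, "this is a test"),
              (28, 41, "of long form audio"), (42, 58, "transcription")]
prompts_seen = []


def fake_decode(offset: float, prompt: str) -> List[Segment]:
    """Returns the utterances that start inside the current 30 s window."""
    prompts_seen.append(prompt)
    return [Segment(s, e if e <= offset + WINDOW else None, t)
            for s, e, t in utterances if offset <= s < offset + WINDOW]


print(transcribe_sequential(60, fake_decode))
# ['hello there', 'this is a test', 'of long form audio', 'transcription']
```

Each iteration needs the previous window’s output (both the new offset and the prompt), which is exactly why this loop can’t be batched.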

This algorithm is sequential: we need the model’s prediction for one segment before we can move on to the next. Therefore, we can’t exploit the parallel computing capabilities of modern GPUs by batching.

It’s already implemented in the official whisper package. After running the benchmark, we get the following result:

vram: 9.7 GB

latency: 10.8 minutes

wer: 10.9

cer: 4.3

2. Huggingface Transformers ASR Chunking Algorithm

The automatic speech recognition pipeline from the huggingface transformers package uses a different long form transcription algorithm.

Instead of sequentially transcribing 30-second segments and shifting the audio buffer, this algorithm splits the audio into 30-second chunks that overlap their neighbors (5 seconds of stride on each side), transcribes each chunk, and stitches the results together. The algorithm is described in more detail in this blog post.
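The windowing can be sketched in a few lines. This is a simplified illustration in seconds, assuming `chunk_length_s=30` and a 5-second stride on each side (to my understanding, the pipeline’s default stride is `chunk_length_s / 6`). The real pipeline works on raw samples and, for whisper, merges the overlapping transcripts at the token level rather than cutting at these exact times; `chunk_boundaries` is a hypothetical helper.

```python
from typing import List, Tuple


def chunk_boundaries(duration_s: float, chunk_s: float = 30.0,
                     stride_s: float = 5.0) -> List[Tuple[float, float, float, float]]:
    """Return (start, end, keep_start, keep_end) in seconds for each chunk.

    Consecutive chunks overlap by 2 * stride_s; each chunk is only "responsible"
    for its middle part [keep_start, keep_end], except at the edges of the file.
    """
    step = chunk_s - 2 * stride_s  # new audio contributed by each chunk (20 s here)
    chunks = []
    start = 0.0
    while True:
        end = min(start + chunk_s, duration_s)
        keep_start = start if start == 0 else start + stride_s
        keep_end = end if end >= duration_s else end - stride_s
        chunks.append((start, end, keep_start, keep_end))
        if end >= duration_s:
            return chunks
        start += step


for chunk in chunk_boundaries(70.0):
    print(chunk)
# (0.0, 30.0, 0.0, 25.0)
# (20.0, 50.0, 25.0, 45.0)
# (40.0, 70.0, 45.0, 70.0)
```

The kept regions tile the file without gaps, and no chunk needs another chunk’s output, which is what makes batching possible.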

After running the benchmark with the model loaded in float32 precision and a batch size of 1, we got the following result:

vram: 7.6 GB

latency: 6.9 minutes

wer: 16.2

cer: 10.0

Batching

The main advantage of this algorithm is that we don’t need the preceding segment’s prediction; we can process each chunk independently. This lets us exploit the GPU’s parallelism by batching multiple chunks in one forward pass.
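Because the chunks don’t depend on each other, batching them is just a matter of grouping the list of chunks; each group then goes through the model in a single forward pass. A minimal sketch, where the `batched` helper and the chunk stand-ins are hypothetical (Python 3.12’s `itertools.batched` does the same but yields tuples):

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of up to batch_size independent chunks, in order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # the last batch may be smaller


chunks = [f"chunk_{i}" for i in range(10)]  # stand-ins for 30-second audio chunks
print([len(b) for b in batched(chunks, 4)])  # [4, 4, 2] -> 3 forward passes instead of 10
```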

Batch size of 8

If we increase the batch size to 8, the vram usage increases and the latency drops significantly, without affecting the accuracy. Here are the results:

vram: 11.0 GB

latency: 4.6 minutes

wer: 16.2

cer: 10.0

Quantization

In machine learning, quantization is the process of reducing the number of bits needed to store the model’s parameters by reducing their precision. The most common precision for models is float32, which uses 4 bytes, or 32 bits, to store each parameter.

Float16

For large models with billions of parameters, we can reduce the precision of the parameters without sacrificing much accuracy. In fact, float16 is one of the most common precisions for LLMs. It uses 2 bytes, or 16 bits, to store each parameter. This results not only in a smaller model in terms of storage (3.2GB vs 6.4GB) but also in less computation per forward pass, since we now multiply 16-bit numbers instead of 32-bit ones.
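A back-of-the-envelope check of the storage savings: the whisper paper lists about 1.55B parameters for the large model, so the weights alone take roughly 6.2 GB in float32 and half that in float16. That is in the same ballpark as the sizes quoted above; the exact figures depend on the checkpoint format and on GB vs GiB. This only counts the weights, not activations or other runtime buffers, so measured vram is higher.

```python
N_PARAMS = 1.55e9  # approximate parameter count of whisper large (whisper paper)

BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weights_size_gb(n_params: float, dtype: str) -> float:
    """Memory needed just to store the weights, in decimal gigabytes (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weights_size_gb(N_PARAMS, dtype):.1f} GB")
# float32: 6.2 GB
# float16: 3.1 GB
```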

If we run whisper in float16 precision with a batch size of 1 using the transformers framework, we get the following results:

vram: 3.6 GB

latency: 6.6 minutes

wer: 16.2

cer: 10.0

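A significant reduction in vram! This means we can run whisper on a much smaller GPU (provided it supports float16 computations). Also, there’s no drop in accuracy compared to float32. However, the latency is roughly the same as with float32. This isn’t because the T4 lacks float16 support (its Tensor Cores are built for mixed-precision inference); a more likely explanation is that with a batch size of 1 the GPU is underutilized, so cheaper arithmetic doesn’t translate into lower latency. As the batched runs below show, float16 pays off once the GPU has more work per forward pass.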

Float16 + Batch size of 8

Now let’s try batching with float16:

vram: 5.7 GB

latency: 2.14 minutes

wer: 16.2

cer: 10.0

This time we get a significant drop in latency at a small cost of increased vram usage.

Can we squeeze even more performance? Let’s try a batch size of 16.

Float16 + Batch size of 16

vram: 8.2 GB

latency: 2.04 minutes

wer: 16.2

cer: 10.0

We get a further drop in latency. While in our case it was very small (2.04 vs 2.14 minutes), it might be worth it for longer audio files that are multiple hours long.

Now we still have some unused vram, so let’s try a batch size of 32!

Float16 + Batch size of 32

vram: 13.2 GB

latency: 2.0 minutes

wer: 16.2

cer: 10.0

We get a very small drop in latency at the cost of increased vram usage. It’s probably not worth increasing the batch size from 16 to 32.

3. BetterTransformer

The concept of BetterTransformer was introduced in PyTorch 1.12. It is a more efficient implementation of the transformer architecture that uses fused kernels and efficient attention implementations such as FlashAttention and scaled dot-product attention (SDPA). It is integrated into the Hugging Face ecosystem through the optimum library. Since our T4 GPU doesn’t support FlashAttention-2, we are limited to SDPA.
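For reference, the operation these kernels compute is standard scaled dot-product attention, where Q, K and V are the query, key and value matrices and d_k is the key dimension. Fused kernels such as FlashAttention produce the same result but compute it tile by tile, without materializing the full attention matrix in GPU memory.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```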

We can request an efficient attention implementation when loading the model with the transformers pipeline (FlashAttention-2 if it’s available, SDPA otherwise):

```python
import torch
from transformers import pipeline
from transformers.utils import is_flash_attn_2_available

pipe = pipeline(
    "automatic-speech-recognition",
    "openai/whisper-large-v2",
    torch_dtype=torch.float16,  # load the weights in half precision
    device="cuda:0",
    # Use FlashAttention-2 when flash-attn is available, otherwise fall back to PyTorch SDPA
    model_kwargs={"attn_implementation": "flash_attention_2"} if is_flash_attn_2_available() else {"attn_implementation": "sdpa"},
)
```

Now let’s run the model and see the results:

vram: 8.2 GB

latency: 2.04 minutes

wer: 16.2

cer: 10

These are the same results as the plain transformers pipeline in float16 with a batch size of 16! What’s happening here?

Well, after reading the docs, it turns out SDPA is natively integrated into the transformers library for whisper. So we’ve already been using it all this time!

4. Faster-Whisper

Faster-Whisper uses the CTranslate2 implementation of whisper. CTranslate2 is a machine learning inference library written in C++ with a Python API. It was initially built for machine translation models, but it has grown to support other transformer architectures like whisper and Llama 2.

It provides an efficient implementation of transformers on CUDA-capable GPUs through batching, kernel fusion and a native C++ implementation.

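Let’s see how it performs:

vram: 3.5 GB

latency: 2.6 minutes

wer: 12.0

cer: 4.7

Well, it looks like it’s as efficient as the best HF transformers runs (2.6 minutes with only 3.5 GB of vram) while getting close to the accuracy of openai-whisper (12.0% vs 10.9% wer)!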

This means we can use it on small GPUs while getting high transcription accuracy.

Unfortunately, faster-whisper doesn’t support batching (at least at the time of writing), even though its CTranslate2 backend does.

5. WhisperX

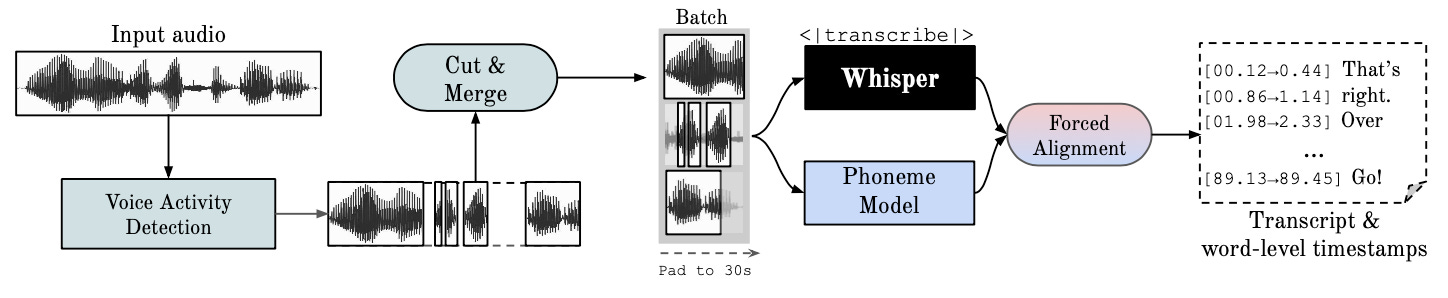

Introduced in the paper WhisperX: Time-Accurate Speech Transcription of Long-Form Audio, WhisperX is a newly proposed algorithm for transcribing long audio using whisper. The algorithm is implemented as an open source Python package.

Since its introduction, it has seen many improvements, including batched inference on the CTranslate2 backend. It relies on a voice activity detection (VAD) model to segment the audio into chunks of at most 30 seconds. Notably, it doesn’t use the predicted text of the previous segment as a prompt for the next one, since the authors report that doing so causes hallucinations. This means each segment can be processed independently, so multiple segments can be batched in one forward pass.
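The VAD-based segmentation can be sketched as a "cut and merge" step over the speech spans. This is a simplified illustration of the idea described in the WhisperX paper, not the package’s code: the speech spans below are made up (in WhisperX they come from a VAD model), and the paper cuts overly long spans at points of low voice activity, whereas this sketch simply cuts them at fixed 30-second intervals.

```python
from typing import List, Tuple

Span = Tuple[float, float]  # (start, end) in seconds


def cut_and_merge(speech: List[Span], max_len: float = 30.0) -> List[Span]:
    """Turn VAD speech spans into chunks that are at most max_len seconds long."""
    # Cut: split any speech span that is longer than max_len
    # (simplification: at fixed intervals rather than at low-activity points).
    pieces: List[Span] = []
    for start, end in speech:
        while end - start > max_len:
            pieces.append((start, start + max_len))
            start += max_len
        pieces.append((start, end))

    # Merge: greedily extend the current chunk while it stays within max_len.
    chunks: List[Span] = []
    for start, end in pieces:
        if chunks and end - chunks[-1][0] <= max_len:
            chunks[-1] = (chunks[-1][0], end)
        else:
            chunks.append((start, end))
    return chunks


# Made-up VAD output for illustration
speech = [(0.0, 4.0), (5.0, 20.0), (22.0, 31.0), (32.0, 75.0)]
print(cut_and_merge(speech))
# [(0.0, 20.0), (22.0, 31.0), (32.0, 62.0), (62.0, 75.0)]
```

Each resulting chunk fits in whisper’s 30-second window and can be transcribed without looking at its neighbors.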

Float16 + Batch size of 1

First of all, let’s try float16 with a batch size of 1:

vram: 3.9 GB

latency: 2.7 minutes

wer: 10.0

cer: 3.6

Wow, we actually get better transcription accuracy. It’s the best so far.

Also, the performance (vram and latency) is very similar to faster-whisper. This is expected since they use the same backend, namely CTranslate2.

Float16 + Batch size of 16

Now, let’s try batching:

vram: 7.6 GB

latency: 1.2 minutes

wer: 10.0

cer: 3.6

This is impressive. We get the highest accuracy (lowest wer & cer) and also the best performance (lowest latency with a reasonable vram).

Float16 + Batch size of 32

Let’s try an even bigger batch:

vram: 11.5 GB

latency: 1.2 minutes

wer: 10.0

cer: 3.6

We get a slight reduction in latency at the cost of increased vram usage. It’s probably not worth it to increase the batch size from 16 to 32.

Beam Search

Since WhisperX has the highest accuracy so far, let’s see how beam search (with a beam size of 5 and a batch size of 32) affects it:

vram: 8.5 GB

latency: 1.8 minutes

wer: 10.7

cer: 3.9

Looks like beam search made it less accurate!
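For context, here is a toy sketch of beam search versus greedy decoding: instead of committing to the single most likely next token, beam search keeps the `beam_width` most likely partial transcripts at each step. It is a generic illustration with made-up probabilities, not the decoder used by WhisperX or CTranslate2 (real decoders also handle end-of-text tokens, length penalties and more). It also shows that beam search optimizes the model’s total sequence probability, which isn’t the same thing as making fewer errors against a reference transcript.

```python
import math
from typing import Callable, Dict, List, Tuple

Seq = Tuple[str, ...]


def beam_search(step: Callable[[Seq], Dict[str, float]],
                beam_width: int, max_len: int) -> Seq:
    """Keep the beam_width prefixes with the highest total log-probability at each step.

    With beam_width=1 this reduces to greedy decoding.
    """
    beams: List[Tuple[float, Seq]] = [(0.0, ())]
    for _ in range(max_len):
        candidates = [(score + logp, seq + (tok,))
                      for score, seq in beams
                      for tok, logp in step(seq).items()]
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
    return beams[0][1]


# Made-up next-token probabilities, chosen so that greedy and beam search disagree
TOY = {(): {"a": 0.6, "b": 0.4},
       ("a",): {"x": 0.4, "y": 0.3, "z": 0.3},
       ("b",): {"c": 0.9, "d": 0.1}}


def toy_step(seq: Seq) -> Dict[str, float]:
    return {tok: math.log(p) for tok, p in TOY[seq].items()}


print(beam_search(toy_step, beam_width=1, max_len=2))  # ('a', 'x'): p = 0.6 * 0.4 = 0.24
print(beam_search(toy_step, beam_width=2, max_len=2))  # ('b', 'c'): p = 0.4 * 0.9 = 0.36
```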

6. whisper.cpp

whisper.cpp is a native implementation of whisper in pure C/C++. It was actually Georgi Gerganov’s first project in the “.cpp” series and laid the foundation for llama.cpp.

Its main selling point is that it’s a minimal implementation of the model in pure C/C++ without any external dependencies. It started out as an educational project by Gerganov, but it soon picked up a lot of traction in the open source ML community and is now one of the largest open source projects in the field of ML.

whisper.cpp has a lot of features and tools built around the base whisper model: a CLI, a pre-built Docker container, a streaming mode with speculative decoding, and long-form transcription.

It mainly targets the Apple Mac ecosystem, but it also has CUDA support (and I believe there are efforts to integrate other GPU backends like OpenCL and ROCm).

I cloned and built the project with CUDA support and ran inference on Colab using the following snippet:

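```
# Clone the repo
!git clone https://github.com/ggerganov/whisper.cpp
%cd /content/whisper.cpp

# Install g++ (C++ compiler)
!apt-get install -y g++

# Download the model (ggml format)
!bash ./models/download-ggml-model.sh base.en

# Compile with CUDA support (newer versions of whisper.cpp renamed this flag)
!make clean
!WHISPER_CUBLAS=1 make -j

# Run inference on a 16 kHz, 16-bit WAV file and save the output to a txt file
# (-m selects the model; models/ggml-base.en.bin is also the default)
!./main -m models/ggml-base.en.bin -otxt -f audio_file.wav
```

Here are the first few lines of the output: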

```
[MUSIC PLAYING]
Please welcome AI researcher and founding member of OpenAI,
Andrej Karpathy.
[APPLAUSE]
Hi, everyone.
I'm happy to be here to tell you about the state of GPT,
and more generally, about the rapidly growing ecosystem
of large language models.
```

Interestingly, it not only transcribed the speech but also annotated other sounds like music playing and applause. This can be very useful in real applications.
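The exact clean-up step used for the benchmark isn’t shown, so here is one hypothetical way to strip these annotations before scoring: a regex that drops anything in square brackets. It assumes the annotations are always bracketed like the ones above and that the spoken text itself never contains square brackets; `strip_non_speech` is an illustrative name.

```python
import re

# Bracketed non-speech tags such as "[MUSIC PLAYING]" or "[APPLAUSE]"
NON_SPEECH = re.compile(r"\[[^\]]*\]")


def strip_non_speech(text: str) -> str:
    """Remove bracketed annotations and collapse the leftover whitespace."""
    return " ".join(NON_SPEECH.sub(" ", text).split())


raw = ("[MUSIC PLAYING] Please welcome AI researcher and founding member of OpenAI, "
       "Andrej Karpathy. [APPLAUSE] Hi, everyone.")
print(strip_non_speech(raw))
# Please welcome AI researcher and founding member of OpenAI, Andrej Karpathy. Hi, everyone.
```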

However, for our benchmarks, this may result in worse metrics, since our reference transcript only contains the speech. So let’s clean this up and run the evals:

vram: 4.6 GB

latency: 4.2 minutes

wer: 10.4

cer: 3.5

Well, its accuracy is very high, almost on par with WhisperX. However, its efficiency lags behind, as it uses more vram and has higher latency. This is expected, since the project targets CPUs and the Mac ecosystem rather than efficient GPU execution.

7. Other models

A few wonderful people suggested I should also try other whisper checkpoints to see how they compare to large-v2. Let’s try large-v3 and distil-large-v2 using WhisperX.

large-v3

Using a batch size of 16, we get the following results:

vram: 8.5 GB

latency: 1.2 minutes

wer: 11.56

cer: 3.99

So it’s slightly less accurate than large-v2.

distil-large-v2

Using a batch size of 16, we get the following results:

vram: 5.6 GB

latency: 0.77 minutes

wer: 25.46

cer: 15.23

Ouch! While it’s much faster, there’s a huge drop in accuracy compared to large-v2 and large-v3!

Examining the model’s predictions, it looks like it hallucinated on more than one occasion. Here’s one example:

Typically, for example, you could use something like biparinecoding, which iteratively merges little text chunks and groups them into tokens. And so here I'm showing some example chunks of these tokens, and then this is the raw integerger integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer integer in the the Now,

Let’s see if we can squeeze more performance out of it by increasing the batch size.

distil-large-v2 with a batch size of 32

vram: 8.9 GB

latency: 0.75 minutes

wer: 25.58

cer: 15.3

We see a significant increase in vram usage with no meaningful improvement in latency (0.75 vs 0.77 minutes). Furthermore, there’s a tiny drop in accuracy.

Closing Remarks

Whisper is a defining step in the field of speech-to-text. In this blog post, we embarked on a journey to explore some of the most common tools built on top of it. The results are summarized in the following table:

| Model (precision, batch size) | Latency (minutes) | vram (GB) | Efficiency 100/(latency × vram) | WER (%) | CER (%) |
|---|---|---|---|---|---|
| OpenAI Whisper | 10.8 | 9.7 | 1.0 | 10.9 | 4.3 |
| Transformers (fp32, B=1) | 6.9 | 7.6 | 1.9 | 16.2 | 10.0 |
| Transformers (fp32, B=8) | 4.6 | 11.0 | 2.0 | 16.2 | 10.0 |
| Transformers (fp16, B=1) | 6.6 | 3.6 | 4.2 | 16.2 | 10.0 |
| Transformers (fp16, B=8) | 2.1 | 5.7 | 8.2 | 16.2 | 10.0 |
| Transformers (fp16, B=16) | 2.0 | 8.2 | 6.0 | 16.2 | 10.0 |
| Transformers (fp16, B=32) | 2.0 | 13.2 | 3.8 | 16.2 | 10.0 |
| FasterWhisper (fp16) | 2.6 | 3.5 | 11.1 | 12.0 | 4.7 |
| whisper.cpp | 4.2 | 4.6 | 5.2 | 10.4 | 3.5 |
| WhisperX (fp16, B=1) | 2.7 | 3.9 | 9.6 | 10.0 | 3.6 |
| WhisperX (fp16, B=16) | 1.2 | 7.6 | 11.0 | 10.0 | 3.6 |
| WhisperX (fp16, B=32) | 1.2 | 11.5 | 7.4 | 10.0 | 3.6 |
| WhisperX (beam5, B=32) | 1.8 | 8.5 | 6.5 | 10.7 | 3.9 |
| WhisperX (fp16, B=16, large-v3) | 1.2 | 8.5 | 9.8 | 11.6 | 4.0 |
| WhisperX (fp16, B=16, distil-large-v2) | 0.8 | 5.6 | 23.2 | 25.5 | 15.2 |
| WhisperX (fp16, B=32, distil-large-v2) | 0.8 | 8.9 | 15.0 | 25.6 | 15.3 |

These experiments were performed on an NVIDIA T4 GPU on Kaggle. Here’s the notebook in case you want to reproduce them: sota-asr.
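The efficiency column combines speed and memory into a single score, 100 / (latency in minutes × vram in GB), so higher is better. A tiny helper (hypothetical, for illustration) reproduces it from the table’s columns. Some rows appear to have been computed from unrounded latencies, so recomputing from the rounded values can differ slightly.

```python
def efficiency(latency_min: float, vram_gb: float) -> float:
    """Efficiency score used in the summary table: 100 / (latency in minutes * vram in GB)."""
    return 100 / (latency_min * vram_gb)


print(round(efficiency(10.8, 9.7), 1))  # OpenAI Whisper -> 1.0
print(round(efficiency(1.2, 7.6), 1))   # WhisperX (fp16, B=16) -> 11.0
```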

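We found that, on our benchmark, WhisperX is the best framework for transcribing long audio files efficiently and accurately. It’s much better than the standard openai-whisper library: roughly 9x faster (1.2 vs 10.8 minutes), with less vram (7.6 vs 9.7 GB) and a slightly lower WER (10.0% vs 10.9%).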

However, this is by no means an exhaustive study. We should keep the following points in mind:

Even though our audio file is relatively long (40+ minutes), it’s still just one data point. A more rigorous study would use 20+ audio files from different sources and with different speaking styles.

These results are valid as of 2024-03-20; this field is rapidly changing and these results can quickly become outdated.