Decoding Whisper: An In-Depth Look at its Architecture and Transcription Process

Part 2 of a multi-part series in which we delve deep into Whisper, OpenAI's state-of-the-art automatic speech recognition model

Overview

In the first part of the series, we talked about the data curation and preparation process for developing Whisper. In this part, we will discuss the architecture of the model and how Whisper converts audio segments into text.

The Architecture

A plain old seq2seq encoder-decoder transformer

While developing Whisper, the focus was mainly on studying the capabilities of large-scale supervised pre-training for speech recognition. For this reason, the authors decided to stick to a tried and tested off-the-shelf architecture to avoid confounding the findings with model improvements.

More specifically, they chose an encoder-decoder transformer similar to the one presented in Attention is All You Need (Vaswani et al., 2017); this architecture has been studied extensively and is validated to scale reliably.

The Audio Processor

Before entering the encoder, the audio segment has to be processed first. The audio processor expects a single-channel audio array with a maximum duration of 30 seconds and a sampling rate of 16,000 Hz. It converts the audio segment to an 80-channel log-mel spectrogram computed with 25-millisecond windows and a stride of 10 milliseconds [1]. This takes the signal from the time domain to a time-frequency representation.
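To make these numbers concrete, here is a small arithmetic sketch of what the window and stride mean in samples. The assumption that a full segment yields exactly one spectrogram column per stride (that is, the edges are padded) is mine and is not stated above.

```python
SAMPLE_RATE = 16_000   # Hz, as expected by the audio processor
WINDOW_MS = 25         # length of each analysis window
STRIDE_MS = 10         # hop between consecutive windows
MAX_SECONDS = 30       # maximum segment duration

window_samples = SAMPLE_RATE * WINDOW_MS // 1000   # samples covered by one window
stride_samples = SAMPLE_RATE * STRIDE_MS // 1000   # samples between window starts
total_samples = SAMPLE_RATE * MAX_SECONDS          # samples in a full segment

# Assumption: one spectrogram column per stride over the whole segment.
n_frames = total_samples // stride_samples

print(window_samples, stride_samples, total_samples, n_frames)
# prints: 400 160 480000 3000
```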

The Encoder

The first part of the model is the encoder. This is the part that’s responsible for processing the audio and extracting a good representation of what’s spoken in the audio segments. It consists of the following pieces:

Stem: Two 1D convolution layers (conv1d), each followed by a GELU activation. This plays a role analogous to the token embedding layer in an LLM.

Positional Embedding: Whisper uses sinusoidal positional embeddings for the encoder.

Encoder Blocks: A standard encoder block consisting of multi-head self-attention and a feed-forward layer, with layer normalization and GELU activation.
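For readers who have not seen sinusoidal positional embeddings before, here is a minimal NumPy sketch. It follows the interleaved sine/cosine layout from Vaswani et al.; the exact layout and sizes used in Whisper's code may differ, and the sizes below are purely illustrative.

```python
import numpy as np

def sinusoidal_positions(n_positions: int, d_model: int) -> np.ndarray:
    """Return an (n_positions, d_model) table of sinusoidal position embeddings."""
    assert d_model % 2 == 0, "d_model must be even"
    positions = np.arange(n_positions)[:, None]              # (n_positions, 1)
    dims = np.arange(0, d_model, 2)[None, :]                 # (1, d_model / 2)
    angles = positions / np.power(10000.0, dims / d_model)   # (n_positions, d_model / 2)
    table = np.zeros((n_positions, d_model))
    table[:, 0::2] = np.sin(angles)   # even dimensions hold sines
    table[:, 1::2] = np.cos(angles)   # odd dimensions hold cosines
    return table

# Illustrative sizes only, not Whisper's real dimensions.
pe = sinusoidal_positions(n_positions=4, d_model=8)
print(pe.shape)
```

The table is fixed rather than learned, so it is simply added to the output of the stem.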

The Decoder

The second and final part of the model is the decoder. This is the part that’s responsible for generating the text (for transcription and translation). Whisper uses a pretty standard decoder that can be found in many encoder-decoder transformers (e.g. T5). It consists of the following pieces:

Semantic Embedding: A pretty standard token embedding layer. The vocabulary size is 51,865 for the multilingual models.

Positional Embedding: Whisper uses learned positional embedding for the decoder.

Decoder Blocks: A standard decoder block consisting of causal multi-head self-attention (causal because the decoder must not look at future tokens), cross-attention over the encoder output, and a feed-forward layer, with layer normalization and GELU activation.
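The "causal" part is easy to picture with a tiny example. The sketch below applies a causal mask to a matrix of raw attention scores and normalizes each row with a softmax. It is a single-head illustration with no learned projections, and the function name is hypothetical.

```python
import numpy as np

def causal_self_attention_weights(scores: np.ndarray) -> np.ndarray:
    """Mask future positions in (T, T) attention scores, then softmax each row."""
    t = scores.shape[0]
    future = np.triu(np.ones((t, t), dtype=bool), k=1)     # True above the diagonal
    masked = np.where(future, -np.inf, scores)             # future positions get -inf
    masked = masked - masked.max(axis=-1, keepdims=True)   # for numerical stability
    weights = np.exp(masked)                               # exp(-inf) becomes 0
    return weights / weights.sum(axis=-1, keepdims=True)

# With identical scores, each token attends uniformly to itself and earlier tokens.
weights = causal_self_attention_weights(np.zeros((4, 4)))
print(weights)
```

Row i of the result has non-zero weights only for positions 0 to i, which is what stops the decoder from peeking at tokens it has not generated yet.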

The Tokenizer

The tokenizer is responsible for converting tokens into integer IDs and vice versa. It converts the sequence of predicted token IDs (e.g. [5674, 8746, 1212]) into text (e.g. “The cat sat on the mat”). It also splits the text into tokens for training and evaluation. Finally, it adds special tokens like the beginning-of-sequence (BOS) token and the end-of-sequence (EOS) token.
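Here is a toy sketch of those three jobs: encoding, decoding, and adding special tokens. The word-level vocabulary, the IDs, and the `<bos>`/`<eos>` names are all hypothetical; Whisper's real tokenizer works on sub-word units and uses its own special tokens.

```python
# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"<bos>": 0, "<eos>": 1, "the": 2, "cat": 3, "sat": 4, "on": 5, "mat": 6}
ID_TO_TOKEN = {token_id: token for token, token_id in VOCAB.items()}
SPECIAL_IDS = {VOCAB["<bos>"], VOCAB["<eos>"]}

def encode(text: str) -> list[int]:
    """Split text into word tokens, map them to IDs, and wrap with BOS/EOS."""
    ids = [VOCAB[word] for word in text.lower().split()]
    return [VOCAB["<bos>"], *ids, VOCAB["<eos>"]]

def decode(ids: list[int]) -> str:
    """Map IDs back to tokens, dropping the special tokens."""
    return " ".join(ID_TO_TOKEN[i] for i in ids if i not in SPECIAL_IDS)

ids = encode("The cat sat on the mat")
text = decode(ids)
print(ids)    # prints: [0, 2, 3, 4, 5, 2, 6, 1]
print(text)   # prints: the cat sat on the mat
```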

The journey of an Audio Segment

It can be difficult to actually grasp how Whisper works under the hood. I would like to explain this by walking through the journey the audio goes on before we obtain the predicted text. This helps cement the details of the architecture and the function of each piece.

Outside the Model

1. Audio Preprocessing

The Whisper encoder expects a single-channel audio array at a sampling rate of 16,000 Hz and a duration of exactly 30 seconds. To obtain this array, we do the following steps:

Convert the audio to be mono-channel.

Resample the audio to 16kHz.

Pad (if <30s) or truncate (if >30s) the audio to exactly 30s.

The output of these steps is an array of 480,000 (16,000 samples per second * 30 seconds) floating point values.
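The steps above can be sketched in a few lines of NumPy. This is a minimal illustration with hypothetical helper names: it assumes the audio has already been resampled to 16 kHz (resampling is left out to keep the example dependency-free) and that padding is done with zeros at the end.

```python
import numpy as np

SAMPLE_RATE = 16_000           # Hz
N_SAMPLES = SAMPLE_RATE * 30   # 480,000 samples in a 30-second segment

def to_mono(audio: np.ndarray) -> np.ndarray:
    """Average the channels of an (n_samples, n_channels) array; 1-D input passes through."""
    return audio if audio.ndim == 1 else audio.mean(axis=1)

def pad_or_trim(audio: np.ndarray, length: int = N_SAMPLES) -> np.ndarray:
    """Zero-pad at the end or truncate so the result has exactly `length` samples."""
    if audio.shape[0] >= length:
        return audio[:length]
    return np.pad(audio, (0, length - audio.shape[0]))

# Five seconds of fake stereo audio, assumed to be at 16 kHz already.
stereo_clip = np.ones((SAMPLE_RATE * 5, 2), dtype=np.float32)
segment = pad_or_trim(to_mono(stereo_clip))
print(segment.shape)   # prints: (480000,)
```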

2. Audio Processing

The second step is to send this array to the Whisper processor, which first creates the log-mel spectrogram (25-millisecond windows with a stride of 10 milliseconds) and then rescales it to lie between -1 and 1 with approximately zero mean across the pre-training dataset. This gives us the features that are passed to the Whisper model.
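The rescaling step can be sketched as follows. The constants here (a floor 8 log-units below the peak, then shift by 4 and divide by 4) are an assumption modeled on the open-source implementation and should be checked against it. The function name is hypothetical, and the input is a made-up mel power spectrogram rather than real audio.

```python
import numpy as np

def scale_log_mel(mel_power: np.ndarray) -> np.ndarray:
    """Log-compress a mel power spectrogram and squeeze it into a range of width 2."""
    log_spec = np.log10(np.maximum(mel_power, 1e-10))       # avoid log(0)
    log_spec = np.maximum(log_spec, log_spec.max() - 8.0)   # limit the dynamic range to 8
    return (log_spec + 4.0) / 4.0                           # assumed shift and scale

# Made-up power values spanning many orders of magnitude.
mel_power = np.array([[1e-12, 1e-3],
                      [1e-1, 1.0]])
features = scale_log_mel(mel_power)
print(features)
```

By construction the spread of the output is at most 2. Where exactly it lands depends on the loudest value in the segment; in this example the peak power is 1, so the output falls in [-1, 1].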

Inside the model

1. Encoding the audio

Pass the input features through the stem (2xconv1d + GELU) to obtain semantic embedding of the audio.

Add sinusoidal positional embedding.

Pass the result as input to the first encoder block.

Run through the encoder blocks one by one, where the output of the first block is the input to the second block and so on.

The output of this step is a latent representation of the audio. This is passed into the cross-attention layers in the decoder.
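It helps to track how the sequence length changes through the stem. The sketch below uses the standard output-length formula for a 1D convolution. The kernel width of 3 and the stride of 2 on the second convolution come from the Whisper paper; the padding of 1 and the 3,000 input frames (30 seconds at a 10-millisecond stride) are my assumptions.

```python
def conv1d_out_len(length: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a 1D convolution (dilation 1)."""
    return (length + 2 * padding - kernel) // stride + 1

n_frames = 3000   # assumed: 30 s of audio at one frame per 10 ms

after_conv1 = conv1d_out_len(n_frames, kernel=3, stride=1, padding=1)
after_conv2 = conv1d_out_len(after_conv1, kernel=3, stride=2, padding=1)

print(after_conv1, after_conv2)   # prints: 3000 1500
```

Under these assumptions, the encoder blocks see half as many positions as there are spectrogram frames, which makes self-attention over the audio cheaper.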

2. Decoding the text

Obtain the sequence of predicted tokens so far. (At the beginning, this is an empty sequence.) [2]

Pass this sequence to the token embedding layer to obtain semantic embedding of the text.

Add positional embedding

Pass the result as input to the first decoder block.

Pass the encoding of the audio to the cross-attention layer.

Run through the decoder blocks one by one, where the output of the first block (plus the audio encoding) is the input to the second block and so on.
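The cross-attention step is where the text "looks at" the audio. Here is a stripped-down, single-head NumPy sketch: queries come from the text states, while keys and values come from the audio encoding. It has no learned projections, and the function name and sizes are hypothetical, so treat it as an illustration of the data flow rather than Whisper's actual layer.

```python
import numpy as np

def cross_attention(text_states: np.ndarray, audio_states: np.ndarray):
    """Each text position takes a weighted average of the audio positions."""
    d = text_states.shape[-1]
    scores = text_states @ audio_states.T / np.sqrt(d)   # (n_tokens, n_audio)
    scores = scores - scores.max(axis=-1, keepdims=True) # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ audio_states, weights               # context is (n_tokens, d)

rng = np.random.default_rng(0)
text_states = rng.normal(size=(3, 8))    # 3 tokens generated so far
audio_states = rng.normal(size=(5, 8))   # 5 audio positions from the encoder
context, weights = cross_attention(text_states, audio_states)
print(context.shape, weights.shape)      # prints: (3, 8) (3, 5)
```

Note that there is no causal mask here: every text position is allowed to attend to the whole audio encoding.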

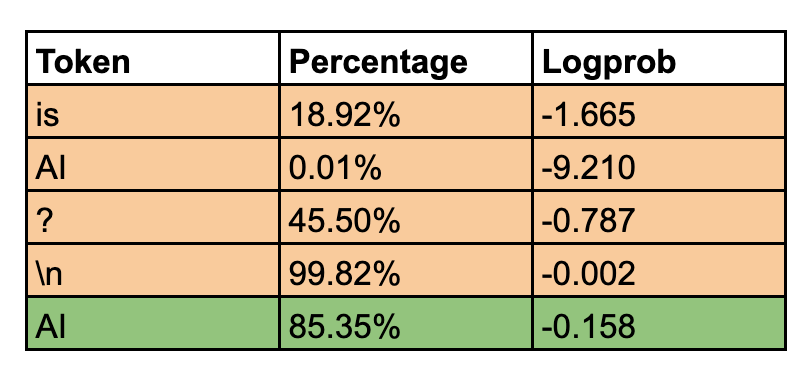

The output of this step is a vector of logits, one for each token in the vocabulary. We can pass these logits through a softmax to obtain a probability distribution over all tokens in the vocabulary, as shown in the figure.
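As a quick illustration of the logits-to-probabilities step, here is a minimal softmax in NumPy. The four logits are made up; a real model produces one logit per vocabulary entry.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Turn a vector of logits into a probability distribution."""
    shifted = logits - np.max(logits)   # subtracting the max avoids overflow
    exp = np.exp(shifted)
    return exp / exp.sum()

logits = np.array([2.0, 1.0, 0.1, -1.0])   # made-up logits for a 4-token vocabulary
probs = softmax(logits)
print(probs, probs.sum())
```

The largest logit always ends up with the largest probability, and the probabilities sum to 1.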

3. Token Sampling

So far, we don’t have predicted text yet. We now only have a (predicted) probability distribution over all tokens in the vocabulary. So how do we go from this probability distribution to generated text? The answer is token sampling!

Since this is an ordinary probability distribution, we can just sample from it. There are different methods for sampling tokens, but the most obvious one is to just pick the token with the highest (predicted) probability.
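The difference between picking the most likely token and drawing a random sample looks like this in NumPy. The three-token distribution is made up for illustration.

```python
import numpy as np

probs = np.array([0.6, 0.3, 0.1])   # made-up distribution over a 3-token vocabulary

# Greedy: always take the most probable token.
greedy_token = int(np.argmax(probs))

# Random sampling: draw a token in proportion to its probability.
rng = np.random.default_rng(seed=0)
sampled_token = int(rng.choice(len(probs), p=probs))

print(greedy_token)   # prints: 0
```

Greedy picking is deterministic, while random sampling can return any token with non-zero probability.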

Okay, we now have a predicted token. But how do we actually obtain the entire predicted text and not just one token?

To obtain the full text, we do the following:

1. Add the predicted token to the sequence of predicted tokens.

2. Pass this sequence (alongside the audio encoding) to the decoder.

3. Obtain a probability distribution over all tokens.

4. Sample one token from this distribution.

5. Repeat steps 1-4 until the end-of-sequence token is sampled or a maximum length is reached.

Now we have a sequence (list) of predicted tokens (e.g. [2345, 12314, 879, 12313]). We can pass them into the tokenizer, which converts them from integers to strings and stitches them back together to obtain the predicted text (e.g. “The cat sat on the mat”).
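The whole loop can be sketched with a stand-in decoder. Everything here is hypothetical: `toy_decoder` ignores the audio and simply replays a fixed script of token IDs, and the BOS/EOS IDs are made up. The point is only to show the control flow of greedy decoding.

```python
import numpy as np

BOS, EOS = 0, 1
VOCAB_SIZE = 7
SCRIPT = [2, 3, 4, 5, 2, 6, EOS]   # what the toy decoder "wants" to say

def toy_decoder(tokens: list[int], audio_encoding) -> np.ndarray:
    """Hypothetical stand-in for the real decoder: logits for the next token."""
    step = len(tokens) - 1                          # tokens generated after BOS
    logits = np.zeros(VOCAB_SIZE)
    logits[SCRIPT[min(step, len(SCRIPT) - 1)]] = 5.0
    return logits

def greedy_decode(audio_encoding, max_len: int = 20) -> list[int]:
    tokens = [BOS]                                  # start from the special token(s)
    while len(tokens) < max_len:
        logits = toy_decoder(tokens, audio_encoding)
        next_token = int(np.argmax(logits))         # pick the most likely token
        if next_token == EOS:                       # stop at end-of-sequence
            break
        tokens.append(next_token)
    return tokens[1:]                               # drop BOS before detokenizing

predicted = greedy_decode(audio_encoding=None)
print(predicted)   # prints: [2, 3, 4, 5, 2, 6]
```

The resulting list of IDs is what gets handed to the tokenizer to be turned back into text.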

And with this, our journey comes to an end! If you’re a bit lost, here’s a quick recap:

We started with an audio segment

We pre-processed it by padding (or truncating) to 30s.

We processed it to obtain features.

We passed the features to the encoder to obtain audio encoding.

We passed the audio encoding to the decoder to obtain the predicted text (by sampling from the decoder outputs).

Recap

If you made it to this part, you might need a big picture of what happened. Here's a simplified recap of this blog post.

Data and scaling

The focus of the Whisper project was on scaling the training on massive amounts of data. The authors used an off-the-shelf architecture to avoid confounding the findings with model improvements.

Architecture: plain old seq2seq encoder-decoder architecture

It consists of an encoder with a stem and multiple blocks alongside a decoder with cross-attention layers. There’s an audio processor for obtaining input features from an audio segment.

Journey of an Audio Segment

Start with an audio segment

Pre-process it by padding (or truncating) to 30s.

Process it to obtain features.

Pass the features to the encoder to obtain audio encoding.

Pass the audio encoding to the decoder to obtain the predicted text (by sampling from the decoder outputs).

Key Insights and Follow-up

It looks like the main improvement in Whisper’s accuracy and robustness came from the scale and quality of the data rather than novel architecture choices.

This is actually very inspiring as it means we can use the transformer architecture for many tasks and it will probably work if we can curate the training data properly.

The downside, however, is that there are no “free” gains in accuracy by simply tweaking the architecture while maintaining the same size of training data and compute.

This is the general theme of the late 2010s and early 2020s: scale is key!

If you found this explanation of the model’s architecture and how it works under the hood helpful, stay tuned for part 3 where we discuss the multitask format of Whisper and how we control the language and style of the output text!

[1] This is true for all Whisper models except for whisper-large-v3, where the input uses 128 Mel frequency bins instead of 80.

[2] Actually, this sequence isn’t empty; it has some special tokens like the BOS token.